Seriously, we don’t.

I was asked on Discord today why some languages require semicolons and others don’t, and this is one of those surprisingly deep questions that to the best of my knowledge hasn’t been answered very well elsewhere:

- Why do some languages end statements with semicolons?

- Why do other languages explicitly not end statements with semicolons?

- Why do some languages require them but it seems like the compiler could just “figure it out,” since it seems to know when you’ve forgotten them?

- Why are they optional in some languages?

- And, of all things, why the weird shape that is the semicolon? Why not | or $ or even ★ instead?

So let’s talk about semicolons, and try to answer this as well as we can.

To really answer this well, we’re going to have to get into our time machine, and go back to the early days of computing, when the computers were huge and slow and expensive, and getting a computer to do anything at all was really, really hard. Ready? Here we go…!

A Long, Long Time Ago, in a University Far, Far Away…

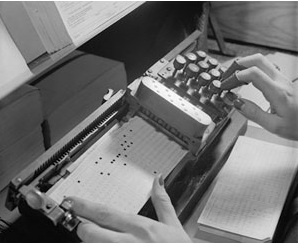

Once upon a time, computers were programmed using flip switches and punch cards. We’ll skip the flip-switch era and start in the 1950s with big IBM mainframe computers that used punch cards.

In those days, you’d program your computer using this:

You’d make your program on a stack of cards, and then you’d feed the program into a little card reader attached to the computer that looked like this:

So how did that actually work? How would you use a punch card to program the computer?

Each of the three major languages of the late 1950s — assembly, Fortran, and COBOL — had something in common: They were statement-oriented. You would describe your program in steps, or statements, like “Add this number to that number,” or “If this number is nonzero, go to step 3.” Your statements had to be short and simple, because the computer simply wasn’t smart enough to handle much more than that. So each card was a statement. You’d punch the holes for the letters and numbers you wanted the computer to understand in that statement. This card represents X = Y + Z, and that card represents PRINT X. Nothing too complicated about that conceptually. You’d put the cards in the order you wanted the program to run in, and the computer would read them in, and then do them in the order given.

(An aside on punch cards: Why use these in the first place? Why not just use keyboards? The answer was that punch cards were cheap, and keyboards were expensive! Punch card readers had been invented in the late 1800s for controlling other machinery, so by the 1950s, they were a comparatively “cheap” technology — a far less expensive way to get data into a machine than correctly manufacturing a thing with hundreds of electromechanical switches on it. Making a decent mechanical typewriter wasn’t too hard, but making one that used a hundred reliable electronic switches? The manufacturing sector really hadn’t gotten the price on that low enough to be usable.)

So for the languages of this era, it’s important to understand that they not only didn’t use semicolons, they didn’t use anything. Your statement didn’t end at the end of the “line” — it ended at the end of the card, whether you wanted it to or not! When writing out the program by hand, you’d write it in “lines,” and if you had the computer print your program back to you — on the big printer, because it didn’t have a screen — the print would come out in “lines,” but really, programmers of the era thought in cards. The way to separate each statement in your program was a physical break.

(courtesy Hackaday)

Importantly, the position on the card mattered. If you were punching a card, you had to make sure that you put the letters in the right columns, or the computer wouldn’t recognize your input. In Fortran, for example, the statement had to start at column 7 on each card, and assemblers had similar fixed columns for their instructions. (Today, we’d call that indentation.) The columns before that were reserved for naming that line (in some languages) or just for comments (in others). Today, we’d call that significant whitespace, but in those days, it was simply a rule you learned as part of learning the computer: Things in this position on the card meant this, things in that position on the card meant that.
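To make the "position is meaning" idea concrete, here is a minimal Python sketch of how a reader might slice one card into fields. It assumes the classic Fortran fixed-form layout (label in columns 1 to 5, continuation mark in column 6, statement text in columns 7 to 72, sequence number in columns 73 to 80); other languages and machines assigned their columns differently, and the names `Card` and `read_card` are made up for this illustration.

```python
from typing import NamedTuple


class Card(NamedTuple):
    """Fields of one 80-column card, assuming Fortran fixed form."""
    is_comment: bool
    label: str
    continuation: bool
    statement: str
    sequence: str


def read_card(card: str) -> Card:
    # Pad to 80 columns so every slice below is well defined.
    card = card.ljust(80)
    return Card(
        is_comment=card[0] in "Cc*",        # a C (or *) in column 1 marks a comment
        label=card[0:5].strip(),            # columns 1-5: numeric label, if any
        continuation=card[5] not in " 0",   # column 6: anything but blank/zero continues
        statement=card[6:72].rstrip(),      # columns 7-72: the statement itself
        sequence=card[72:80].strip(),       # columns 73-80: card sequence number
    )


print(read_card("   10 X = Y + Z"))
```

Nothing in the text of the card says where the label ends and the statement begins; the column numbers alone decide that.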

We still have vestiges of that today: Some assemblers still require that labels always be left-aligned, always smashed up against the left column of the input, while the instructions must always be indented, typically by one tab stop (8 characters!).

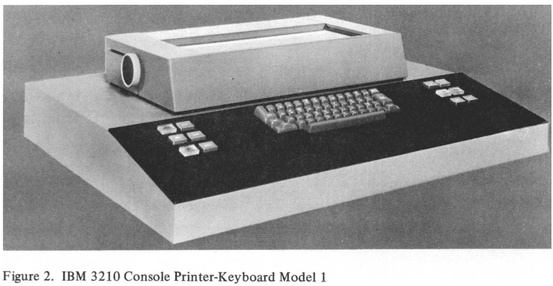

Eventually, keyboards were added to those early computers, and at that point, the transition from cards to lines was a pretty natural one: After all, the printer already printed back the cards of your program using lines, so it made sense to start using lines for input as well, and the languages really didn’t have to change. Everything was line-oriented, because there were no screens: You’d type a line of keystrokes on the keyboard, and the computer would then print back to you its response on the printer attached above the keyboard.

How did this work? You’d be in an “interactive session,” where you’d type commands to the computer to explain how you wanted to create your program:

    > E10                      (edit line 10)
    IF (X .LT. 10) THEN        (the replacement text for line 10)
    OK                         (computer's response)
    > I13                      (insert a new line after line 13)
    WRITE 'X IS INVALID'       (the new line of text for line 13)
    OK                         (computer's response)
    > P10                      (print line 10)
    IF (X .LT. 10) THEN        (computer's response)
    >

And so on. It might seem tedious, but it was a big step forward compared to punch cards. But importantly, the languages were still designed around one line at a time. And as long as you only ever have one line, you can’t be wrong about how it ends…

The Algol Project

“Everybody” knew in the late 1950s that the programming languages at the time were clunky. Fortran was just “upgraded assembly language,” and COBOL invested so much effort on procedure and paperwork that you could write twenty lines of punch cards that did absolutely nothing.

By accident, a group of researchers led by John McCarthy at MIT had just “discovered” a weird new programming language they were calling Lisp, for list processing, and it didn’t look anything like COBOL or Fortran, but it wasn’t anything you’d write big commercial software in. The world needed an alternative to COBOL and Fortran, and a lot of money was on the line, so a group of the brightest minds convened in 1958 and…

…formed a committee to talk about it.

In all seriousness, the people who created Algol were utterly brilliant. McCarthy was there, as were John Backus and Peter Naur, and two of the earliest implementations were made by Tony Hoare and Edsger Dijkstra. We owe a lot to them, and every modern programming language can be traced directly to the Algol 60 group’s work in some way.

Tony Hoare and Edsger Dijkstra, two of its first implementors;

and John Backus and Peter Naur, whose math helped make Algol possible.

(all photos courtesy Wikipedia)

One of the important realizations of the introduction of keyboards was that programs weren’t really a sequence of independent chunks anymore. Inside the computer, the data from each “card” was stored right up against the data from the next “card,” so it had become natural to think of it as a whole program instead of as truly independent statements. Each line of the program was merely separated by a marker, a single byte in memory indicating where it ended. Some computers used a 000 byte for this, and others used 377, and there were others that had a special byte like 0A for it. There wasn’t much consistency between representations, but the important thing was that the program looked different when you saw it in memory: It wasn’t lines. It was just a continuous sequence of characters with separator marks in between:

    ... |IF (X .LT. 10) THEN|WRITE 'X IS INVALID'|X = 0|GOTO 10|END| ...

This realization, that a program was just a looong single string with separator marks in it, allowed for a simple question to be asked whose answer had profound consequences: What if the separator mark was just another character? What if the “line separator” was treated by the language like a space, and something else was used instead to mark the ends of statements?

That realization formed one of the foundations of the work by the Algol 60 committee: That software could be free-formatted — that spaces and lines didn’t and shouldn’t matter as much as the printable characters would.
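Here is a small Python sketch of the two ways of finding statements in that one long string. It is deliberately naive (it would be fooled by a semicolon inside a quoted string, for instance), and the function names and sample programs are invented for illustration, not taken from any real compiler.

```python
def split_line_oriented(source: str) -> list[str]:
    """Card/line style: every non-empty line is one statement."""
    return [line.strip() for line in source.splitlines() if line.strip()]


def split_free_format(source: str) -> list[str]:
    """Free-format style: newlines are just whitespace; ';' separates statements."""
    flattened = " ".join(source.split())  # collapse all whitespace, newlines included
    return [part.strip() for part in flattened.split(";") if part.strip()]


fortran_like = "X = Y + Z\nPRINT X\n"
algol_like = "X := Y + Z; PRINT(\n    X\n);\nY := 0"

print(split_line_oriented(fortran_like))  # ['X = Y + Z', 'PRINT X']
print(split_free_format(algol_like))      # ['X := Y + Z', 'PRINT( X )', 'Y := 0']
```

In the second version the line breaks inside PRINT( X ) simply vanish, which is exactly the freedom the free-format idea was after.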

Another critical part of the story of the semicolon involves a philosophical shift over what a statement constituted in the first place. In the early days, a statement was a primitive operation, or something only slightly more complex, like X = Y + Z. But it was natural to conceive of it as being able to be bigger: A procedure, or subroutine, or what we’d today more often call a function or a method, was a logical unit of computation that could be treated like a “more advanced statement.” So instead of using lines to separate content, why not describe things to the computer following the same patterns that English uses?* Conjunctions like “and” and “or” to combine independent clauses, and a period at the end — or maybe even a semicolon between independent clauses instead? Language experiments from the late 1950s were starting to look like this…

    PROCEDURE SOMETASK:
    BEGIN
        LET X EQUAL Y + Z;
        IF X < 10 THEN PRINT "X IS INVALID";
        ELSE LET V = 3
    END.

That’s not quite English, but it’s a lot more English-like than IF (X .LT. 10) could ever hope to be. Lines didn’t matter here; everything could be laid out however you wanted it, and indentation was just for convenience. Even if you wrote it all on one line, the intent was pretty clear, and vaguely sentence-like:

    PROCEDURE SOMETASK: BEGIN LET X EQUAL Y + Z; IF X < 10 THEN PRINT "X IS INVALID"; ELSE LET V = 3 END.

Importantly, semicolons were statement separators here, not terminators: the inspiration was really English, and the goal was to get as far away from punch cards and Fortran as possible.

The Algol 60 committee eventually settled on a design that was very similar to the code above, and the semicolon was enshrined into programming languages for the very first time:

    BEGIN DISPLAY("HELLO WORLD!") END.

    BEGIN
        FILE F(KIND=REMOTE);
        EBCDIC ARRAY E[0:11];
        REPLACE E BY "HELLO WORLD!";
        WRITE(F, *, E);
    END.

Not everybody was on board with this. In Elliott 803 Algol, for example, you used tick marks to end statements, not semicolons:

    BEGIN
        PRINT PUNCH(3),££L??'
        B := SIN(A)'
        C := COS(A)'
        PRINT PUNCH(3),SAMELINE,ALIGNED(1,6),A,B,C'
    END'

A lot of early computers had very limited character sets, and the Elliott 803 simply didn’t have a semicolon! This wasn’t uncommon: The Algol language specification actually required a number of characters that don’t exist on keyboards even today, like ÷ and ≠ and ↑. Everything was still very experimental in those days, and a lot of keyboards and printers were repurposed parts from typewriters, and just because my computer had a ≠ key didn’t mean yours did. We didn’t standardize on character sets like EBCDIC until 1963, or ASCII until the late 1960s, and Unicode was still decades away. Given the limitations of the day, having a language like Algol that was even vaguely similar between computers was considered to be a major advance.

So for the first time, we had semicolons between our statements, more-or-less. It wasn’t always consistent, and there were caveats and quirks, but the free formatting that semicolons provided let you write far prettier-looking code than fixed formatting ever did, and it was a natural evolution of the languages of the day.

(* Why English? Most of the early work in computer science was being done in the United States, and English is the lingua franca here. Sorry, rest-of-the-world: You didn’t start to heavily influence the history of computing until the 1970s.)

C

In 1970, Ken Thompson wanted to play a video game.

Well, that might not be exactly right, but it makes for a good story. Either way, Thompson really wanted to take some software he’d been using on the MULTICS operating-system project, which Bell Labs had just pulled out of, and keep using it. Pairing up with another programmer at Bell Labs named Dennis Ritchie, he managed to cajole the Bell Labs leadership into letting him use a clunky old PDP-7 computer upstairs in the attic. They were told to make a word processor for it, so that the secretary pool could use it for typing up documents, and they knew that to make it usable for anything at all, it would need an operating system.

(courtesy Wikipedia)

So Thompson did what any good coder would do and started hacking together a quick and dirty OS for it in assembly language.

Meanwhile, Thompson, with contributions from Ritchie, had also been working on a little mini programming language. A language named CPL (“Combined Programming Language”) had been created based on a lot of Algol 60’s ideas, but CPL was huge and complex. Martin Richards had recently taken CPL and stripped it down to a smaller subset called BCPL (“Basic CPL”), but BCPL was still too big for the little computers that Thompson and Ritchie were working on, so Thompson stripped out almost everything and called his new language B.

The B compiler was incredibly simplistic. Thompson didn’t have a lot of space to work in, so even though CPL had gotten rid of semicolons because they were slightly “ugly” in the minds of many programmers, he needed the semicolons to make a working, simple compiler that could fit into the tiny tiny memory of the computers he was using. It could only make one forward pass through the source code. It didn’t support multiple data types, only integers! It didn’t have the storage capacity to properly figure out where statements ended, so it needed the programmer to tell it!

So in B, semicolons didn’t separate statements. They ended statements, and every statement had to end with a semicolon or the compiler would just get confused and produce garbage output.
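The separator-versus-terminator distinction is easy to blur, so here is a tiny Python sketch of it. The helper names are hypothetical and the "statements" are just strings; the only point is where the semicolons land.

```python
def join_as_separator(statements: list[str]) -> str:
    """Algol style: ';' goes between statements only."""
    return "; ".join(statements)


def join_as_terminator(statements: list[str]) -> str:
    """B/C style: every statement carries its own ';'."""
    return " ".join(stmt + ";" for stmt in statements)


stmts = ["x = y + z", "print(x)", "x = 0"]
print(join_as_separator(stmts))   # x = y + z; print(x); x = 0
print(join_as_terminator(stmts))  # x = y + z; print(x); x = 0;
```

Three statements need two separators but three terminators. With a terminator, a simple one-pass compiler can treat "I just saw a semicolon" as "the statement is finished" without looking any further ahead.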

Thompson started reimplementing his little OS in B, but B was a little too oversimplified, so Ritchie added back in a very few features — structs, a simple pointer syntax, and separate types for integers and characters — and in 1972, he bumped the name up from “B” to “C.” Thompson then rewrote the rest of his “Unix” kernel in C.

And Unix took off. Primarily because it was free.

In those days, the operating system was typically sold with the computer by the manufacturer. You’d buy a big IBM System/360 mainframe for $10 million, and of course for that kind of price tag IBM would bundle in an OS to make the thing usable.

(courtesy Wikipedia)

But in the 1970s, there was a growing class of “minicomputers,” computers that only cost a few tens of thousands or a few hundred thousand dollars, and these sometimes would be sold without an OS. You could make your own, or you could buy it separately.

Or you could get a copy of Unix.

Bell Labs, where Unix had been created, was owned by AT&T, which was a government-regulated monopoly at the time. Under a 1956 antitrust consent decree, AT&T was barred from selling anything outside the telephone business, so it couldn’t market Unix as a commercial product. Thompson and Ritchie were happy to send out tapes with early copies of C and Unix on them, and colleges and universities across the United States were happy to receive them: apart from a nominal licensing fee, the only thing they had to spend money on was the computer itself, and they could save tens of thousands of dollars by just using Unix instead of buying the computer vendor’s OS.

Thus the C-style semicolon, a statement terminator, began to spread far and wide. Meanwhile, Niklaus Wirth’s new Pascal teaching language used semicolons the Algol way, as statement separators (Pascal was designed as a stripped-down Algol, specifically suitable for teaching); and for a while, the two models competed on even footing. C ran on all the minicomputers at your college, and Pascal was taught in your classes, and so engineering students were often exposed to both. A whole new generation of programmers grew up thinking that semicolons were a perfectly normal and even necessary part of every program.

A Grammatical Aside

Of course, semicolons weren’t necessary. C only used them because the compiler wasn’t smart enough to figure out how to compile without them. Pascal only used them following the Algol tradition, where the program was supposed to look like an English sentence, and even end with a period.

But let’s talk a little more about why C wouldn’t work without them.

In C, any expression can be a valid statement if you end it with a semicolon. This actually makes some sense, given its history: C is a programming language that was originally pared back too far, to become effectively unusable — and then with just a very few features added back in to make it usable again. Other languages had special rules about what constituted a valid statement, but C didn’t go that far. You could write this, and the compiler would happily compile it for you:

    x;

Does that do anything? No! It just gets the value of x, and then throws it away. But any expression is a valid statement, so if x was legal anywhere, it was legal as a statement too. All of the statements below are completely legal in C, even if they don’t do anything especially useful:

    f;
    (g);
    -h;
    a+b;

In each case, we get a value, or maybe compute a value, and then we throw it away, because we don’t use an = to put it somewhere. You don’t need an = for an expression to be valid, so in C, you don’t need it for a statement to be valid either.

But let’s look at those first three lines, and let’s pretend semicolons were optional in C, or had been left out altogether. That would mean you could legally write this:

    f
    (g)
    -h

Same thing, right? Or is it?

Remember, the computer doesn’t see lines like you do. Code isn’t two-dimensional as far as the computer is concerned. Line breaks mean nothing. The computer just sees this:

    f (g) -h

And without the semicolons, now this code has a really severe problem. Should the computer read from f, and then g, and then calculate -h, and then throw all three values away? Or should the computer call f(g) and then subtract h from the result?

The answer is that both answers could be right. This is what’s called an ambiguous parse: We don’t know what it’s supposed to mean, because it could mean multiple different things.
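To see why the compiler can’t tell the two layouts apart, here is a toy tokenizer in Python. It is only a sketch with a made-up, much simplified token rule (real C lexers handle numbers, strings, multi-character operators, and so on), but it treats line breaks the way a free-format language does: as whitespace to be skipped.

```python
import re

# An identifier, or any single non-whitespace character; whitespace is skipped.
TOKEN = re.compile(r"[A-Za-z_]\w*|\S")


def tokens(source: str) -> list[str]:
    return TOKEN.findall(source)


three_lines = "f\n(g)\n-h"
one_line = "f (g) -h"

print(tokens(three_lines))                      # ['f', '(', 'g', ')', '-', 'h']
print(tokens(three_lines) == tokens(one_line))  # True
```

Once the line breaks are gone, both versions are the same six tokens, so whatever the parser decides for one, it must decide for the other.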

Here’s an even worse example:

    *x = z
    *y = w

If C didn’t have semicolons, the compiler would fail on this code! Why? Because the compiler doesn’t see the line breaks. Instead, it sees this:

    *x = z *y = w

The original appears to be a couple of simple pointer assignments. The latter, however, looks to the compiler like you wrote z * y in the middle of it: that’s multiplication, not pointers, and a broken assignment at the end! The entire statement is gibberish to the compiler without having the semicolons to disambiguate it.

In C, then, the semicolons are necessary, because without them, the language is so loose that the compiler literally doesn’t know what you mean.

Notably, C++, which inherits C’s syntax, has a misfeature called the most vexing parse — even with the semicolons included, there are certain arrangements of type names, identifier names, and parentheses that could have multiple possible meanings, and the compiler literally just picks one by an arbitrary rule.

JavaScript, which inherits C’s syntax, tried to do away with semicolons via something called automatic semicolon insertion, where it “invisibly” adds the semicolons back in for you if you leave them out, but JavaScript sometimes gets this wrong. For example, the JavaScript code below doesn’t do what it looks like it does:

    return
    5

Thanks to inheriting C’s “all expressions are valid statements” rule, and automatic semicolon insertion, a JavaScript interpreter will treat that code as though you wrote this instead:

    return;
    5;

That code actually returns undefined and never bothers to calculate the 5 or do anything useful with it! As JavaScript shows all too well, in C-style super-loose languages, leaving out the semicolons can actually be a disaster waiting to happen.

The Scripting Era

Importantly, the languages before Algol didn’t use semicolons, and their traditions didn’t simply die just because the Algol family got popular. For example, Lisp has semicolons, but it uses them for comments — just like so many of the assemblers did back in the 1950s.

    (defun f (x y)   ; Let's make a function
      (+ x y))       ; that returns x plus y.

Matlab uses semicolons to separate rows in matrices:

    A = [1 2 0; 2 5 -1; 4 10 -1]

And, notably, scripting languages like Ruby and Python and Lua don’t need semicolons at all.

Why not?

Remember the two reasons why the semicolons were added to programming languages in the first place:

- First, because of philosophy, because people wanted code to read like English; and

- Second, because of ambiguity, because in some languages, you need the programmer to explicitly tell the computer where things start or end.

Philosophy is an easy thing to work around — you just have to believe something different! So the only remaining question is: Is there a technical reason why you must have semicolons? Is C’s syntax the only way to do it?

The answer is a resounding “no!” You can readily design a language in which semicolons aren’t needed at all, and Lua is a great example here:

    -- defines a factorial function
    function fact(n)
      if n == 0 then
        return 1
      else
        return n * fact(n-1)
      end
    end

    print("enter a number:")
    a = io.read("*number")        -- read a number
    print(fact(a))

Lua is designed so that there’s almost no such thing as an “ambiguous parse”: even if the code above were written on one line, there’s exactly one way to interpret it. Indentation is optional, whitespace is optional; you can format the code however you like and it will still work.

Of course, in exchange, Lua has keywords like end that you use to tell the language where things end. Ruby does the same thing, albeit with a lot more punctuation. (Lua is more “wordy,” relying heavily on keywords; Ruby is more “symbolic,” relying on punctuation and symbols to perform similar operations.) We still have to help the computer in some places to understand where things begin and end, but in these languages, we don’t use semicolons to do it, and the languages go to some design effort to avoid us having to explicitly state the ends of things when possible. (It’s usually at the end of blocks of code.)

In other languages, like Python and Haskell, you use significant whitespace instead of semicolons. They’re in some ways a modern return to the punchcard era, where the position of the code matters. In Python, you don’t need an end keyword to describe the end of something, but you definitely need to get the indentation right, or the computer won’t know where things start and end:

    def fact(n):
        if n == 0:
            return 1
        else:
            return n * fact(n-1)

    a = input("enter a number:")
    print(fact(int(a)))

Here, you can’t just break lines anywhere, either: Python assumes that line endings mean statement endings, just like in the Fortran and COBOL days. (If you want to wrap lines because they’re too long, you’ll have to wrap them in parentheses: Inside parentheses in Python, you can use spacing however you like.)
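You can ask Python’s own parser how it splits things up. The sketch below uses the standard-library ast module to count top-level statements, reusing the f (g) -h example from the C discussion; the helper name count_statements is made up for this illustration.

```python
import ast


def count_statements(source: str) -> int:
    """Number of top-level statements Python's parser finds in the source."""
    return len(ast.parse(source).body)


print(count_statements("f\n(g)\n-h"))    # 3: each line is its own statement
print(count_statements("f (g) -h"))      # 1: the call f(g), minus h
print(count_statements("(f\n(g)\n-h)"))  # 1: inside parentheses, line breaks are ignored
```

Because the line break is the terminator, the three-line and one-line versions really are different programs in Python, and the ambiguity that C needs semicolons to resolve never comes up.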

In languages with significant whitespace, mis-indentation can cause trouble. This program looks nearly identical to the factorial program above, but it’s simply broken and won’t run at all; can you see why?

    def fact(n):
        if n == 0:
            return 1
         else:
            return n * fact(n-1)

    a = input("enter a number:")
    print(fact(int(a)))

The Modern Semicolon

So after all that, why do we still have semicolons in our languages? Are they just a holdover from the Bad Old Days when computers were small and stupid? Are they just tradition, a philosophy we’ve carried for 50 years just because everyone is used to them? Or is there still some value that they bring to the languages that include them?

TypeScript and Rust, for example, are modern languages that were created with semicolons. C# and Java are a little older, but they still have semicolons too. So why do we still keep making languages with semicolons, especially if you can just design your fancy new language without any ambiguous syntax in it? Lua and Ruby and Python and Haskell don’t need them; so do they really still serve a purpose?

Yes!

Okay, we’ve firmly crossed into “opinion” territory now, but I’m going to make an argument that in a free-formatted language, semicolons still offer some value. (In “significant whitespace” languages, like Python, they don’t offer much: This argument applies to true free-formatted languages only.)

Let’s look at C#. C# is in the C family, to be sure. But Anders Hejlsberg, its creator, spent most of his career before C# working on Pascal compilers, and Pascal has a very different philosophy than C does about semicolons (and about a lot of other things). He was surely aware of other languages that didn’t have semicolons at all when he started designing C#, but he still chose to include them, and in the C style, no less — as statement terminators, not separators (like in Pascal). Why would he do that when he could just as easily have left them out altogether?

(courtesy Wikipedia)

The answer is likely intent — specifically, the intent of the programmer.

Let’s look at a good example to see why semicolons might be a virtue and not a sin:

    if (x < y)
        a = f(
    g(y))

Oops, while we were writing this code, we forgot to finish the if-statement on the first line. It was probably going to end with something like a = f(x).

Probably.

But — maybe it was supposed to have ended with a = f. Or maybe it was supposed to be a = f(g(y)) instead. There’s no way to be sure. Without semicolons, it’s really hard to tell what was supposed to happen here. The compiler can tell us we got it wrong (actually in this case, it won’t even tell us it was wrong, because that’s actually legitimate code!), but the compiler can’t tell us anything about how to fix it, because it doesn’t know what a fix might look like.

Now let’s look at the same code, but with semicolons:

    if (x < y)
        a = f(;
    g(y));

Here, the intent is a lot more obvious. The programmer likely mistyped the first ( and last ), and the compiler can tell them that. Or suppose the semicolons look like this instead:

    if (x < y)
        a = f(
    g(y));

Here, the code will actually compile and work correctly, because the intent is obvious: the programmer accidentally pressed the Enter key after the first (.

Modern languages include semicolons as a defense against programmer errors. Semicolons can help to isolate mistakes, and they help ensure that errors get detected by the compiler and reported so that you can fix them. Yes, it’s annoying when the compiler tells you that you forgot a semicolon, especially if you believe it could just insert the missing semicolon by itself — but what the compiler is really asking you is, “I think I understand, but is this code really what you intended?” The compiler could fix it, but it’s far safer for you to say what you mean than for the compiler to guess — and for it to guess wrong and silently introduce a bug in your code.

Wrapping Up;

So with all that, let’s finally go back and answer the original questions:

- Why do some languages end statements with semicolons?

Partially tradition, partially philosophy, partially because they reduce bugs in free-formatted code.

- Why do other languages explicitly not end statements with semicolons?

In some languages, like Python, they simply don’t make sense; in others, there are alternate terminators like end.

- Why do some languages require them but it seems like the compiler could just “figure it out,” since it seems to know when you’ve forgotten them?

The computer can usually tell if you’re wrong, but telling you how to do it right? That’s a lot harder and not always obvious to it.

- Why are they optional in some languages?

In JavaScript, this is a misfeature, an accident. In other languages, they don’t do anything at all, or don’t appear to except in special cases (like Ruby).

- And, of all things, why the weird shape that is the semicolon?

Because English used them between statements before computers did; and that’s all there is to say about that*.

(*Not really. There’s always more to the story.)